Hinton on Artificial Intelligence (AI)

Geoffrey Hinton, often referred to as the "Godfather of AI", gave a publicly available talk at a conference just a few days ago. I picked up a few of his thoughts that got me thinking.

This is MadeMeThink.xyz – A weekly publication with thoughts on disruptive Technologies (e.g. AI | Web3 | Metaverse), their Applications, Opportunities, and Impact. Written by Prof. Thomas Metzler, Ph.D.

This MadeMeThink last week…

Geoffrey Hinton on AI

Geoffrey Hinton is a cognitive psychologist and computer scientist, best known for his work on artificial neural networks. He spent a decade working on AI at Google before resigning in 2023, a move that drew wide press coverage; he attributed his resignation to concerns about the risks of artificial intelligence. Hinton, often referred to as the "Godfather of AI", is an interesting thought leader, and the talk he gave at a conference a few days ago is publicly available (see video below). In it, Hinton addresses several thematic areas, three of which I would like to discuss.

AI and the existential threat

Hinton: “There’s 99 very smart people trying to make [AI] better and one very smart person trying to figure out how to stop it (…) We're entering a period of huge uncertainty; nobody really knows what's going to happen (…) if [AI] get to be smarter than us, which seems quite likely, and they have goals of their own, which seems quite likely, they may well develop the goal of taking control, and if they do that, we are in trouble.”

This quote got me thinking about whether and how AI development should be regulated. In legislation and regulation, two models are most often discussed: the Precautionary Principle and the Risk Assessment Model. The Precautionary Principle, often employed in Europe, stipulates that PROACTIVE steps should be taken to safeguard public health, the environment, and other areas of concern whenever they could be threatened, even if scientific understanding of the threat is still incomplete.

In contrast, the Risk Assessment Model, widely used in the United States, requires a higher degree of scientific certainty and clear evidence of potential harm before regulations are imposed. Under this model, regulators tend to analyze and quantify risks and benefits BEFORE implementing rules, leaning towards innovation and progress unless substantial, demonstrable harm is anticipated.

As someone who has been immersed in start-up culture, I've always felt more aligned with the Risk Assessment Model. It's a framework that promotes innovation, allows for calculated risks, and doesn't let fear of the unknown stifle potential progress. But is this the right approach for AI? Here, perhaps, the European Precautionary Principle offers a valuable perspective, emphasizing safety and preemptive measures against potential harms.

Nevertheless, one question remains: even if the Precautionary Principle is adopted, would it be effective without a globally unified approach? If some countries implement stringent AI regulations while others take a more laissez-faire attitude, can we truly prevent the potential risks associated with AI? Maybe it's not about choosing between precaution and risk assessment; instead, the real challenge is building a global consensus that balances innovation with security. A shared, globally accepted vision for AI could be the key to unlocking its immense potential while also safeguarding our future.

AI and social and economic inequalities

Moderator: “Is there anything that we can think of (…) that the machines will not be able to replicate?”

Hinton: “We’re wonderful, incredibly complicated machines, but we’re just a big neural net. And there’s no reason why an artificial neural net shouldn’t be able to do everything we can do (…) If you get a big increase in productivity like that the wealth isn't going to go to the people who are doing the work or the people who get unemployed, it's going to go to making the rich richer (...) it's just what happens when you get an increase in productivity, particularly in a society that doesn't have strong unions.”

So how will the wealth generated by AI be distributed? There will be many difficult questions to answer, especially since many political parties take ideological positions on them: A machine tax? An unconditional basic income? Redistribution of wealth? These ideas are probably viewed rather critically in capitalist societies today, but they could become necessary depending on how AI progresses. There is a high probability that AI will replace many jobs in the future; just think of customer service and support staff (see also impact of AI in different use cases). I am also skeptical of the argument that the AI transition, like past technological transformations, will merely shift jobs around. Society needs to start thinking seriously about how to handle this transition.

AI and the increased risk of war

Hinton: “[AI and Robotics are] going to make it much easier, for example, for rich countries to invade poor countries. At present, there's a barrier to invading poor countries, which is you get dead citizens coming home. If they're just dead battle robots, that's just great; the military industrial complex would love that.”

So the worry is not only that AI might turn on us at some point and kill us all; well before that, AI may help us kill each other more efficiently 🤯 I've had that thought a few times when looking at Boston Dynamics' progress with their robots (see video below), and what Hinton says here makes perfect sense to me. It's only a matter of time before we see these technologies in armed conflicts. Or rather, related technologies are already in use, just not yet in the form of combat robots. I recently read an article about the Black Hornet Nano Drone, which uses AI to navigate autonomously, react to changing environmental conditions, and return to its dock without constant human input. Interesting, but also somewhat scary: “The drone's acoustic and visual signature is rated as best-in-class (…) it can be 10 feet (3 meters) away from a person without being heard.” Before you start looking for a Black Hornet Nano Drone to fly around your place, here's a showstopper: a single Black Hornet Nano Drone costs $195,000 👀.

The following video shows the Boston Dynamics robot Atlas in a setting where it helps a handyman. To me, it's pretty clear that this technology will end up in an armed conflict at some point.

Personal update…

On/Off-Holidays

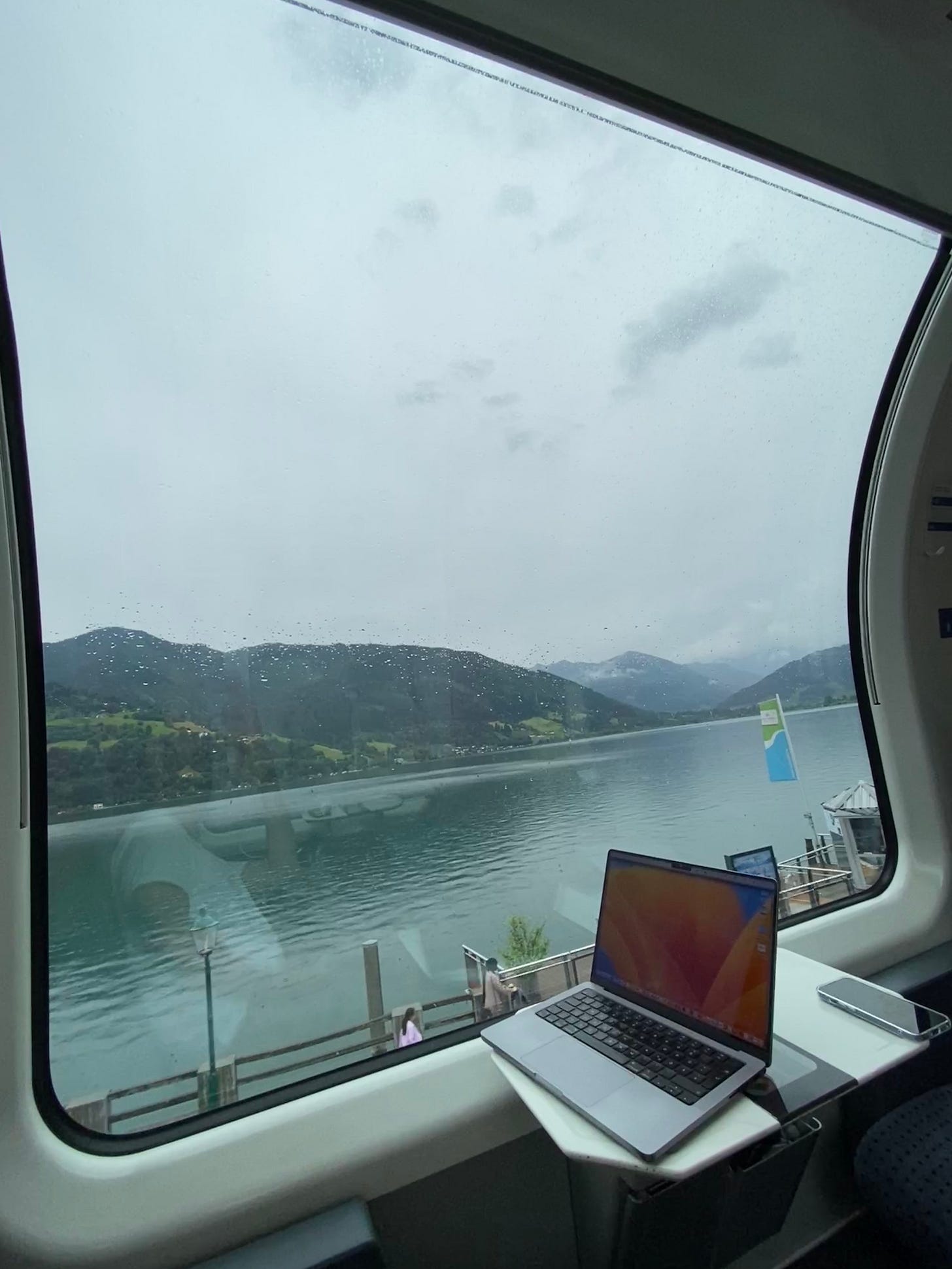

I am still on vacation, though somewhat on/off: the weather here in Austria is very rainy, so I work in between on the rainy days. This week I tried to escape the weather and travelled to Vienna, a train journey of about 7 hours from Bregenz that runs through the Alps, among other places, and is very scenic. Not this time, but on my last trip through Austria, I sat in a Swiss train set with panoramic windows (see picture), which was quite spectacular and made it nice to see the landscape during the ride… although the work I actually wanted to do on the trip suffered a little 🤷‍♂️.

Disclaimer: The thoughts published in this publication are my personal opinions and should not be viewed as investment advice. I am not a financial expert. My specialty is entrepreneurship, marketing & innovation. Readers should always do their own research.